Don’t look. Or, more accurately, you can’t look. You wouldn’t be able to see anything if you tried.

A few banalities to start us off: Artificial intelligence (AI), specifically machine learning, is increasingly being used to make high-stakes medical decisions, including diagnoses, surgical outcome predictions, technical skill evaluations, and disease risk scores. Despite the technology’s pervasiveness, and its use in increasingly critical roles, little is known (even to its makers) about how these systems arrive at their decisions. Together, these facts carry enormous ethical implications for the people subject to those decisions.

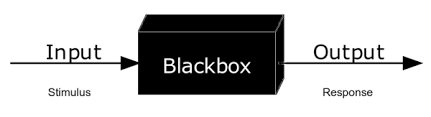

Medical care involves decision-making by a number of actors: the patient, their primary care physician, specialists, pharmacists, and almost always an insurance provider. But what happens when a machine learning system joins this team? Recent applications of AI tools in healthcare are altering the scope of medical decision-making. In some tasks, these systems have already made quicker and more accurate diagnoses than human clinicians, and they show exceptional promise for tailored patient recommendations and outcome predictions. Despite these advancements, their growing use in healthcare has come under scrutiny. Many of these AI systems are black boxes: the mechanisms by which they generate their outputs are uninterpretable to most humans.

Careful consideration of the role of algorithmic systems in medical decision-making is essential to their ethical use, as many of these technologies can increase discrimination in healthcare settings. Scholars also widely debate the morality of using AI in these settings. Who can be held responsible for an AI-generated decision? Does AI challenge the epistemic authority of medical professionals? Are decision subjects, as moral beings, owed a special degree of explanation? Thus far, arguments have largely focused on the best techniques for mitigating bias. However, these techniques alone may be insufficient to capture the host of ethical and epistemic quandaries that accompany AI medical decision-making.

A study conducted by Matthew Groh, an assistant professor at the Northwestern University Kellogg School of Management, tracked the diagnostic accuracy of dermatologists, primary care physicians, and machine learning models through an analysis of 364 images spanning 46 skin conditions. The results showed that while the machine learning models had a 33% higher rate of diagnostic accuracy, they exacerbated the already large accuracy gap across skin tones.

Many thinkers attempting to develop guidelines for the ethical use of AI in decision-making support the development of “fairness metrics” to mitigate bias in data and correct how decisions are distributed among different groups. Bias can enter at numerous points in the development, training, and deployment of these models. Some argue that treating it as a merely technical problem is insufficient, and that greater effort should be made to understand the complexity and morality of today’s socio-technological environment. Data is merely a representation of external systems and conditions. Because these models are trained on real-world data sets, the biases in our social, political, and economic infrastructure influence their decisions, directly or indirectly. Trishan Panch, a co-author of a study examining the effects of AI bias on health systems, told the Harvard T.H. Chan School of Public Health that “there will probably always be some amount of bias because the inequities that underpin bias are in society already and influence who gets the chance to build algorithms and for what purpose.”
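To make the idea of a “fairness metric” a little more concrete, here is a minimal Python sketch of two common group-level checks: the gap in accuracy between groups (the kind of gap reported across skin tones) and the gap in how often a model predicts the positive label, often called the demographic parity difference. The function names, group labels, and toy records below are hypothetical and for illustration only; a real audit would use clinical data and usually several more metrics.

```python
from collections import defaultdict


def group_rates(records):
    """Compute per-group accuracy and positive-prediction rate.

    records: iterable of (group, y_true, y_pred) tuples with binary labels.
    Hypothetical helper, written for illustration only.
    """
    stats = defaultdict(lambda: {"n": 0, "correct": 0, "positive": 0})
    for group, y_true, y_pred in records:
        s = stats[group]
        s["n"] += 1
        s["correct"] += int(y_true == y_pred)  # was the prediction right?
        s["positive"] += int(y_pred == 1)      # did the model flag the case?
    return {
        g: {"accuracy": s["correct"] / s["n"], "positive_rate": s["positive"] / s["n"]}
        for g, s in stats.items()
    }


def max_gap(rates, metric):
    """Largest difference in `metric` between any two groups."""
    values = [r[metric] for r in rates.values()]
    return max(values) - min(values)


# Toy, made-up records: (group, true label, model prediction).
records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 1),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 1), ("group_b", 0, 1),
]

rates = group_rates(records)
print(rates)
print("accuracy gap:", max_gap(rates, "accuracy"))
print("demographic parity difference:", max_gap(rates, "positive_rate"))
```

A metric like this only measures a gap; it says nothing about where the gap comes from, which is precisely why some argue that technical fixes cannot stand in for addressing the underlying social conditions.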

Because the mechanisms by which these systems make decisions are not fully understood (or are buried beneath an opaque surface), these biases are especially challenging to address. Nonetheless, just because something cannot be seen does not mean it is not there. While the opacity of these systems masks the correlates used to produce a decision, the factors that lead to systemic bias are quite clear. So, to best mitigate algorithmic bias, the social conditions that produce it must also be addressed.

The ability of algorithmic systems to operate at a scale that contributes to systemic discrimination and injustice is reason enough to tread carefully as these technologies become more widespread. However, it is certainly worth theorizing about how these systems might be used to address the very bias that so often undermines them. Healthcare and society would be remiss not to acknowledge, and even actively look for, the glint of opportunity these powerful systems offer.

A potential path forward? Panch thinks this “will require normative action and collaboration between the private sector, government, academia, and civil society.” There is a unique opportunity to take preemptive action by creating ethical and regulatory guidelines for the widespread use of this technology. Further, understanding the moral underpinnings of deploying these systems in vastly interconnected institutions can better inform both policymakers’ regulatory goals and our own relationship with AI. Ultimately, though, rather than looking for a flicker in the darkness to resolve these ethical dilemmas, one must look at the bigger picture. These systems reflect our societal values, and the healthcare field cannot address complex, intersectional social phenomena alone. Collective action by social, legal, and political entities is necessary to work toward a more equitable environment, especially in healthcare. Only then can healthcare move forward intentionally and responsibly with this technology.

- Hastings Center Report (2019): 10.1002/hast.973

- Journal of Medical Ethics (2020): 10.1136/medethics-2019-105586

- Nature Machine Intelligence (2019): 10.1038/s42256-019-0048-x

- Nature Medicine (2024): 10.1038/s41591-023-02728-3

- npj Digital Medicine (2023): 10.1038/s41746-023-00858-z

- Springer Link (2023): 10.1007/s11098-023-02013-6

- Philosophy Compass (2021): 10.1111/phc3.12760

- Springer Nature (2022): 10.1007/s00146-022-01614-9